Data is driving the healthcare industry, which currently generates around 30% of the world’s data. Through 2025, healthcare data is expected to grow at a compound annual rate of 36%, outpacing financial services by 10% and media & entertainment by 11%.

In an exclusive interview with Dr. Benjamin Gmeiner, Head of Medical Data Strategy & Science at Novartis, we delve into the benefits and challenges of leveraging data in healthcare, while discussing possible solutions to address these obstacles.

Dr. Benjamin Gmeiner is a recognized expert in data science and healthcare innovation. Leading Medical Data Strategy & Science at Novartis, he drives initiatives to harness health data for advancing clinical research, enabling precision medicine, and improving patient outcomes.

As a former Harvard researcher and the author of numerous scientific publications, Benjamin combines a passion for innovation with a proven track record of impact. He is a sought-after speaker at global healthcare and pharmaceutical conferences.

Big data has the potential to revolutionize healthcare, unlocking transformative opportunities to enhance patient outcomes, streamline healthcare delivery, and drive advancements in medical research.

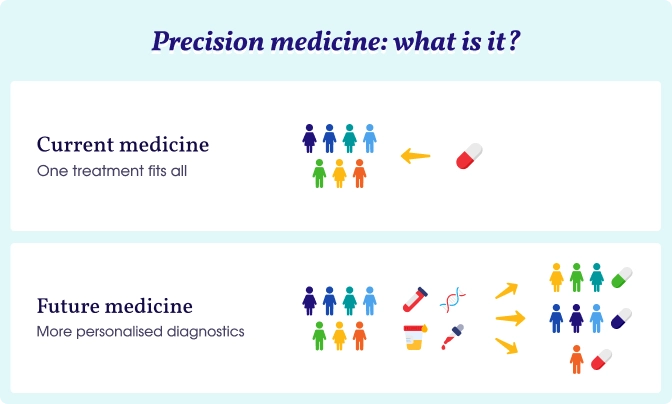

One of the most exciting benefits of big data in healthcare is its potential to deliver personalized treatments.

Data science and artificial intelligence (AI) play crucial roles in understanding individual patient needs. This enables the creation of tailored treatment strategies, which can lead to optimized health outcomes.

Benjamin explains:

> Every person is different, and hence every person needs a different treatment. People from Asia, for example, differ from those in Germany or Spain, not just genetically but also in terms of lifestyle. Factors like sun exposure vary significantly, which can influence health outcomes such as the risk of skin cancer. These differences highlight the complexity of tailoring healthcare to diverse populations.

Big data allows researchers to capture vast amounts of information from diverse patient populations, including genetic data, lifestyle factors, and environmental conditions. This information can be used to create personalized treatment plans optimized for each patient, which is particularly important in precision medicine.

Big data is also enabling major advancements in clinical trials and drug development. AI and machine learning can help researchers predict patient responses to treatments and generate hypotheses for new therapies. By analyzing large datasets, AI can identify patterns and trends that might not be apparent in traditional clinical trials.

In a clinical trial, participants are typically divided into groups, with one receiving the innovative medicine and another receiving standard care or a placebo. By comparing these groups, we aim to demonstrate the new treatment’s safety and efficacy. However, the relevant data extends far beyond the few thousand participants in a trial: real-world data encompasses hundreds of thousands of individuals, each with diverse health conditions and medication regimens. This is where big data combined with AI benefits clinical research, enhancing the depth and scope of analysis.

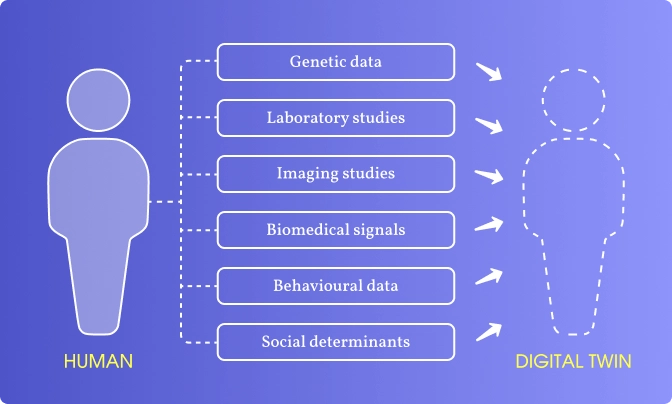

Benjamin says he is excited about the use of “digital twins” in clinical trials: simulations, built from existing data, that predict how a patient might respond to standard care.

> Fast forward 10 to 20 years, and control groups should no longer be needed. Every participant in a trial will receive the innovative treatment from the outset, accelerating access to new therapies for everyone.

These developments in AI and machine learning are not just promising; they are reshaping the landscape of healthcare research and treatment delivery.

Another key benefit of using data in healthcare is its ability to identify at-risk patients early. By examining patterns in large datasets, healthcare professionals can gain new insights into the risk factors that contribute to disease development. This enables a more proactive approach to patient care, as providers can engage with individuals on a more personal level, incorporating data from mobile health applications and connected devices.

These insights can be analyzed in real time, allowing for timely interventions that prompt behavioral changes to reduce health risks and improve overall health outcomes. The convergence of AI, machine learning, and big data ultimately empowers healthcare providers to enhance diagnostic accuracy and improve patient care.

While computers cannot replace the essential judgment of human physicians, they can significantly support clinicians by analyzing extensive healthcare data.

Advanced algorithms can be designed to predict the most probable diagnoses for a patient’s condition, helping physicians make quicker, more informed decisions. They can also suggest targeted tests to verify a diagnosis, helping ensure that patients receive the most suitable care for their individual situations.

While the potential advantages of big data in healthcare are immense, there are considerable challenges to overcome. According to Gartner, 97% of the data generated by organizations goes unused. So what stands in the way?

One of the biggest challenges in healthcare is collecting diverse and representative data. Human populations vary significantly in terms of genetics, lifestyle, and environmental factors, all of which can influence disease risk and treatment outcomes. Capturing this complexity is essential for developing effective data analytics models.

Dr. Benjamin Gmeiner says:

> The challenge lies in the fact that human diversity must be accurately represented in the data used to train algorithms and derive insights. This means that the data needs to come from a variety of sources and capture a wide range of settings and people. Without comprehensive and diverse data, the potential for developing tailored treatments is significantly limited.

To overcome this challenge, the first step is to foster collaboration and eliminate data silos. Usually, data is fragmented across various stakeholders, each holding their own piece of information. To truly harness the power of big data in healthcare, it’s essential to unite these disparate data sources. Collaboration among stakeholders is key; when everyone works together, the combined insights can be far more valuable than individual datasets.

This approach requires a willingness from all parties, including the pharmaceutical industry, to share their data. While concerns about sensitivity and intellectual property must be addressed, there is a wealth of information that can benefit everyone involved. By pooling our data resources and enhancing connectivity, we can create a more comprehensive data landscape. This collaborative effort will ultimately lead to richer insights and more effective solutions in healthcare.

Another significant hurdle is ensuring the high quality of data. While clinical trial data is often meticulously collected and audited, real-world healthcare data can be messy and inconsistent.

> If you look at lab tests, for example, the quality varies. Every lab does things a little differently, using different assays. As a result, there are a lot of pitfalls, missing values, and inconsistencies across hospitals and countries, and this needs to be taken care of.

To address this challenge, it’s essential to assess the quality of the data thoroughly. This involves identifying any gaps or inconsistencies and ensuring that the information is accurate and reliable. Engaging subject matter experts, such as clinicians, is crucial for conducting checks to verify the data’s integrity. Additionally, employing the right technical tools for data imputation can help fill in missing values and enhance overall data quality.
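To make the quality-check and imputation steps more concrete, here is a minimal Python sketch. It assumes a hypothetical, simplified record format (patient ID, test name, value, with `None` marking a missing value) and uses median imputation, one of the simplest techniques; real-world pipelines typically rely on more sophisticated methods combined with clinician review. The test names and values are illustrative only.

```python
from collections import defaultdict
from statistics import median
from typing import Optional

# Hypothetical lab record: (patient_id, test_name, value); None marks a missing value.
Record = tuple[str, str, Optional[float]]


def missing_report(records: list[Record]) -> dict[str, int]:
    """First data-quality check: count missing values per lab test."""
    counts: dict[str, int] = defaultdict(int)
    for _, test, value in records:
        if value is None:
            counts[test] += 1
    return dict(counts)


def impute_median(records: list[Record]) -> list[Record]:
    """Fill missing values with the median of the observed values for the same test.

    Tests with no observed values at all are left as None, so a human can review them.
    """
    observed: dict[str, list[float]] = defaultdict(list)
    for _, test, value in records:
        if value is not None:
            observed[test].append(value)
    medians = {test: median(values) for test, values in observed.items()}

    imputed: list[Record] = []
    for patient, test, value in records:
        if value is None and test in medians:
            value = medians[test]
        imputed.append((patient, test, value))
    return imputed


records: list[Record] = [
    ("p1", "hba1c", 6.1),
    ("p2", "hba1c", None),
    ("p3", "hba1c", 7.3),
    ("p4", "hba1c", 5.9),
    ("p1", "ldl", None),
]
print(missing_report(records))  # {'hba1c': 1, 'ldl': 1}
print(impute_median(records))   # p2's hba1c becomes 6.1; p1's ldl stays None
```

Median imputation is used here only because it is easy to follow. It ignores relationships between variables and can understate uncertainty, which is why checks by subject matter experts such as clinicians remain necessary.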

Data privacy is one of the most critical challenges in healthcare, particularly in regions like Europe, where stringent regulations such as the General Data Protection Regulation (GDPR) apply.

Here is what our expert thinks:

> It is of the utmost importance that data privacy is preserved. This is our highest bar: under no circumstances do we put anyone’s data privacy in danger. It is a very hard criterion, and the data needs to be safe. Making sure that the privacy of every single person is preserved is extremely important.

Balancing the need to share data for research purposes while ensuring individual privacy is protected is a delicate and complex task. To mitigate risks, Novartis and other pharmaceutical companies work closely with regulators, legal experts, and data privacy officers to ensure compliance. They also work on the anonymization of patient data, but even anonymized data can carry the risk of re-identification, especially when combined with other datasets.
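The re-identification risk can be illustrated with k-anonymity, one common way of measuring it (not necessarily the approach Novartis or its partners use). A dataset is k-anonymous if every combination of quasi-identifiers, such as birth year, sex, or postcode prefix, is shared by at least k records. The minimal Python sketch below uses hypothetical field names and toy data; it shows how adding one more attribute, for example a postcode prefix that is also available in another dataset, can leave a record unique.

```python
from collections import Counter
from typing import Any

# Hypothetical "anonymized" row: direct identifiers removed, quasi-identifiers kept.
Row = dict[str, Any]


def group_sizes(rows: list[Row], quasi_identifiers: list[str]) -> Counter:
    """Count how many rows share each combination of quasi-identifier values."""
    return Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)


def k_anonymity(rows: list[Row], quasi_identifiers: list[str]) -> int:
    """Return k, the size of the smallest group (0 for an empty dataset)."""
    sizes = group_sizes(rows, quasi_identifiers)
    return min(sizes.values()) if sizes else 0


def risky_groups(rows: list[Row], quasi_identifiers: list[str], k: int) -> dict[tuple, int]:
    """Return combinations shared by fewer than k rows, i.e. records at risk of re-identification."""
    sizes = group_sizes(rows, quasi_identifiers)
    return {key: n for key, n in sizes.items() if n < k}


rows: list[Row] = [
    {"birth_year": 1980, "sex": "F", "postcode_prefix": "101", "diagnosis": "asthma"},
    {"birth_year": 1980, "sex": "F", "postcode_prefix": "101", "diagnosis": "diabetes"},
    {"birth_year": 1980, "sex": "F", "postcode_prefix": "102", "diagnosis": "asthma"},
    {"birth_year": 1975, "sex": "M", "postcode_prefix": "101", "diagnosis": "copd"},
    {"birth_year": 1975, "sex": "M", "postcode_prefix": "101", "diagnosis": "asthma"},
]

print(k_anonymity(rows, ["birth_year", "sex"]))                     # 2
print(k_anonymity(rows, ["birth_year", "sex", "postcode_prefix"]))  # 1
print(risky_groups(rows, ["birth_year", "sex", "postcode_prefix"], k=2))
# {(1980, 'F', '102'): 1}
```

With only birth year and sex, every record hides among at least two others; once the postcode prefix is linked in, the third record becomes unique, and anyone who knows those three facts about a person could learn their diagnosis.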

Data silos are another challenge hindering the use of big data in healthcare. In many cases, hospitals, research institutions, and pharmaceutical companies store their data separately, making it difficult to collaborate and derive insights.

In the words of Benjamin Gmeiner:

> The current healthcare data landscape is fragmented, with systems lacking the ability to share information effectively. Hospitals are connected to the internet, but their systems were not originally designed for data sharing, as this need wasn’t anticipated. To improve interoperability, it’s essential to establish the right infrastructure that enables these systems to communicate with one another.

Initiatives like the European Health Data Space (EHDS) and the Interoperable Europe Act are designed to facilitate data exchange, including for the delivery of healthcare. Advances in AI and large language models can also gradually help integrate datasets and resolve interoperability issues.

As the demand for real-world data grows, the current system of siloed, fragmented data and sluggish partnerships struggles to keep up. Strict regulations regarding patient privacy and data security further complicate data sharing and access. As a result, valuable insights that could improve patient outcomes and streamline healthcare processes may remain untapped. However, Benjamin Gmeiner believes that this challenge will be addressed in the near future.

In Germany, significant progress has been made in improving access to comprehensive datasets. One key initiative is the Forschungsdatenzentrum (research data center), a centralized hub that consolidates vast amounts of health data, from health insurance records to electronic patient records and cancer registries, and allows researchers to use this data for scientific purposes.

This progress will drive innovations in artificial intelligence, precision medicine, and clinical trials, significantly advancing the understanding of life pathways and improving healthcare outcomes.

Clearly, big data holds immense promise for revolutionizing healthcare, from enabling personalized treatments to improving the efficiency of clinical trials. However, significant challenges related to data collection, quality, privacy, and infrastructure must be addressed to fully realize its potential.

By fostering collaboration, advancing technical capabilities, and ensuring strong regulatory frameworks, the healthcare industry can harness the power of big data to improve patient outcomes and accelerate medical innovation. As Benjamin highlighted, the journey toward a data-driven healthcare system is complex but filled with opportunities. With the right strategies in place, big data can truly transform healthcare for the better.

Attico is a Drupal development company with extensive experience in the healthcare industry. We develop digital solutions for hospitals, clinics, and other medical service providers, focusing on data security, accessibility, and strict adherence to regulatory standards like GDPR, HIPAA, and PIPEDA.