QA metrics help teams measure product quality and process health. This article explores which metrics matter most and how to use them efficiently.

When people talk about quality assurance, they usually imagine skilled testers clicking through interfaces, running scripts, and catching unexpected issues before they reach customers. But behind every confident release, behind every stable deployment and every successful regression cycle, there’s something far less glamorous but absolutely essential: metrics.

Metrics transform quality from a vague feeling into something you can see, measure, question, and improve. They expose weak points, reveal strengths, and quietly show teams whether the things they believe about their process are actually true.

This article explores the essential QA metrics to track — the quality metrics in software testing that show not only how healthy your product is, but how healthy your processes are. Metrics aren’t bureaucracy. They’re honesty. For many teams, the real breakthrough happens when they stop relying on gut feeling and start working with a focused set of criteria, turning every release decision into a data-backed choice rather than a guess.

In most modern teams, releases move fast. New features land weekly, integrations shift constantly, and customers expect instant stability. Teams can’t rely on intuition to maintain quality anymore.

“Metrics translate quality into clear, objective indicators. Without them, everything becomes subjective — developers guess how stable the release is, managers assume all critical paths were checked, and stakeholders hope no unexpected surprises appear after deployment.”

Metrics ground all of that in reality.

They reveal:

Without metrics, teams see only symptoms. With metrics, they can finally see causes. And this is exactly why modern teams rely on structured QA metrics — without them, even mature development pipelines struggle to maintain consistent quality.

Although QA teams often maintain dozens of measurements, only a few form the backbone of overall quality. The two most essential, which the expert called the “true pulse” of QA, are Defect Containment Efficiency and Defect Leakage.

These two indicators are among the most widely used quality metrics in software testing, forming the baseline for understanding how reliably a team can intercept defects before they reach users.

Defect Containment Efficiency (DCE) shows how effectively a particular testing stage “catches” defects and prevents them from leaking into the next stage. Often, the next stage means a release, but it doesn’t have to be — that’s just the most common case.
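As a rough illustration, DCE for a stage is often computed as the share of defects caught in that stage out of all defects that passed through it. The sketch below assumes that definition; the function name and the sample counts are hypothetical, and teams may count defects differently (for example, weighting by severity).

```python
def defect_containment_efficiency(caught_in_stage: int, escaped_to_later: int) -> float:
    """Percentage of defects a stage caught, out of all defects that reached it."""
    total = caught_in_stage + escaped_to_later
    if total == 0:
        # Assumption: with no defects at all, treat the stage as fully contained.
        return 100.0
    return 100.0 * caught_in_stage / total


# Hypothetical counts for one release cycle: 190 caught in testing, 10 found later.
print(defect_containment_efficiency(caught_in_stage=190, escaped_to_later=10))  # 95.0
```

With these made-up numbers the stage sits right at the 95% mark; seven caught and three escaped would give 70%.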

It’s easy to underestimate the emotional weight of this metric. DCE isn’t just a number — it’s the clearest demonstration of whether the testing process is doing its job. When DCE is high, customers rarely encounter serious issues. When DCE falls, trust starts to erode.

“Defect containment shows whether the team managed to prevent late-stage issues. When this number is healthy, the client never even learns how many problems were caught early.”

A strong DCE — usually above 95% — means the team prevented most defects from slipping out. But when DCE dips toward 70% or lower, it indicates serious gaps:

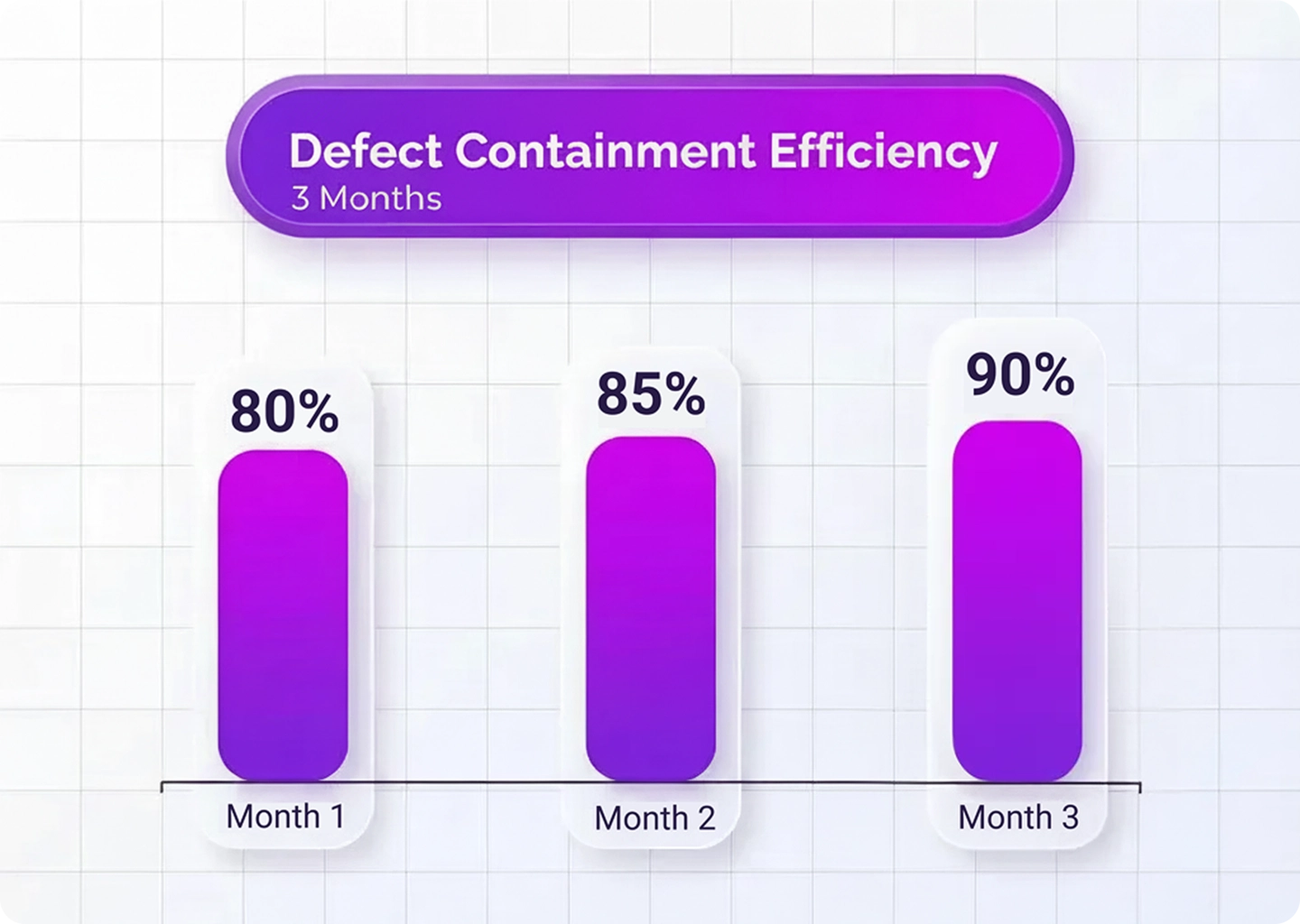

On one long-running project, DCE improved dramatically across a three-month period. The expert shared that after refining regression test suites, adjusting the testing strategy, and stabilizing automation, the number of production issues dropped noticeably.

Clients immediately felt the difference, even though they didn’t know the numbers. That’s the invisible power of containment: customers only notice it when it’s missing.

If Defect Containment Efficiency shows what went right, Defect Leakage (DL) reveals what went wrong.

Defect Leakage measures how many bugs escaped into production — the ones users actually encountered.

“Leakage is the measure no one wants to see go up. It shows the blind spots in your testing process — the issues you didn’t think to check, or didn’t check deeply enough.”

Leakage is more than a technical problem; it’s a trust problem. When clients repeatedly find bugs themselves, they start questioning:

Leakage becomes especially damaging when the escaped defects affect core business pathways — checkout flows, authentication, payments, or dashboards. One bug in a low-traffic admin interface might be barely noticeable, but one bug in ecommerce checkout can affect thousands of users in minutes.

Monitoring DL, along with severity distribution, helps teams understand not only how often they miss issues but also how critical those misses are.

A low DL percentage indicates the QA team’s processes are working effectively. A rising DL trend is a warning bell that teams should never ignore.
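One way to track DL together with severity distribution is sketched below. It assumes leakage is measured as production defects divided by all defects found before and after release; some teams divide by pre-release defects only, so treat the formula, the function names, and the severity labels as illustrative assumptions.

```python
from collections import Counter


def defect_leakage(found_in_production: int, found_before_release: int) -> float:
    """Production defects as a percentage of all known defects for the release."""
    total = found_in_production + found_before_release
    return 100.0 * found_in_production / total if total else 0.0


def severity_distribution(escaped_severities: list[str]) -> dict[str, float]:
    """Percentage of escaped defects per severity label."""
    counts = Counter(escaped_severities)
    total = sum(counts.values())
    return {sev: 100.0 * n / total for sev, n in counts.items()} if total else {}


# Hypothetical release: 76 defects caught before release, 4 escaped.
escaped = ["critical", "minor", "minor", "major"]
print(defect_leakage(len(escaped), 76))   # 5.0
print(severity_distribution(escaped))     # {'critical': 25.0, 'minor': 50.0, 'major': 25.0}
```

Under this particular definition, leakage at the release boundary is simply 100 minus the containment percentage for the same release, so the two numbers describe the same split from opposite sides.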

Defect Density looks at where defects accumulate: by component, module, functional area, or across the entire system. At the system level, it indicates how defect-prone the code is overall; at the module level, it identifies the modules most prone to errors.

“Density metrics show which modules are risk magnets. When defects cluster, it usually means deeper systemic issues.”

Clusters often point to:

Teams use Density to guide regression testing efforts. Instead of testing every path equally, which is inefficient, they apply more attention to the modules with high defect volume.

When a module repeatedly appears on Density charts, it’s rarely a coincidence. It’s a signal: something inside that component needs attention beyond bug fixing — perhaps architectural cleanup, refactoring, or redesign.
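A minimal sketch of a density ranking follows. The article does not specify a unit, so this assumes one common normalization, defects per thousand lines of code (KLOC); the module names and figures are invented for illustration.

```python
def defect_density(defects: int, kloc: float) -> float:
    """Defects per thousand lines of code (an assumed normalization)."""
    if kloc <= 0:
        raise ValueError("kloc must be positive")
    return defects / kloc


def rank_modules(modules: dict[str, tuple[int, float]]) -> list[tuple[str, float]]:
    """Sort modules from highest to lowest density; input maps name -> (defects, kloc)."""
    scored = [(name, defect_density(d, k)) for name, (d, k) in modules.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Hypothetical modules: (defect count, size in KLOC).
modules = {"auth": (4, 8.0), "checkout": (18, 6.0), "admin": (3, 12.0)}
print(rank_modules(modules))  # [('checkout', 3.0), ('auth', 0.5), ('admin', 0.25)]
```

A ranking like this is one possible input for deciding where regression effort goes first; it says nothing by itself about why a module attracts defects.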

Some metrics do not reflect the product directly. They reflect the context in which QA operates, and that context often determines whether QA succeeds or struggles.

These are the process metrics teams often overlook, yet they dramatically influence leakage, containment, and overall quality. Without these process-oriented metrics, even a strong testing strategy on paper can fail in practice because the surrounding delivery environment works against it.

Velocity, compared against the sprint commitment, shows how much planned work the team actually finished in a sprint.

When there’s a large gap between committed and completed work, QA often pays the price:

A poor result here may also point to problems in the process itself: poor planning, incorrect estimates, scope creep, bottlenecks, or spillover (tasks accumulating from sprint to sprint).

“When development unpredictability increases, QA workload becomes chaotic too. Many teams blame bugs on QA, but the root cause is often upstream instability.”

By monitoring velocity trends, teams can understand whether testing time is being protected or squeezed.
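The gap between committed and completed work can be expressed as a simple ratio per sprint. This is a sketch under the assumption that work is estimated in story points; the sprint figures are hypothetical.

```python
def completion_ratio(committed: float, completed: float) -> float:
    """Completed work as a percentage of what was committed at sprint start."""
    if committed <= 0:
        raise ValueError("committed must be positive")
    return 100.0 * completed / committed


# Hypothetical (committed, completed) story points for three consecutive sprints.
sprints = [(40, 38), (42, 30), (40, 26)]
ratios = [round(completion_ratio(c, d), 1) for c, d in sprints]
print(ratios)  # [95.0, 71.4, 65.0]
```

A falling series like this one suggests that work is spilling over and that testing time at the end of each sprint is likely being squeezed.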

Scope Creep measures how much unplanned work enters the sprint.

“Unplanned work disrupts QA most of all. It changes priorities mid-flight and spreads testers thin.”

When Scope Creep is high:

Scope Creep isn’t just inconvenient — it’s one of the biggest drivers of defect leakage.

When QA receives a predictable flow of work, quality rises naturally. When surprises dominate the sprint, quality becomes a gamble. In fact, many organizations underestimate Scope Creep as a part of their process metrics, even though its influence on release stability is often dramatic. Many of the most reliable quality metrics correlate directly with the stability and predictability of the development pipeline.
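Scope Creep can be sketched as unplanned work relative to the original commitment. The representation below, a list of `(points, added_mid_sprint)` pairs, is a hypothetical simplification of what a tracker export might contain.

```python
def scope_creep(tickets: list[tuple[int, bool]]) -> float:
    """Unplanned points as a percentage of originally planned points.

    Each ticket is (story_points, added_mid_sprint).
    """
    planned = sum(points for points, added in tickets if not added)
    unplanned = sum(points for points, added in tickets if added)
    if planned == 0:
        raise ValueError("sprint has no planned work to compare against")
    return 100.0 * unplanned / planned


# Hypothetical sprint: 16 planned points, 4 points added after the sprint started.
sprint = [(5, False), (8, False), (3, False), (4, True)]
print(scope_creep(sprint))  # 25.0
```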

These two metrics don’t measure product defects — they measure the effectiveness of problem correction and prevention.

Invalid defects occur when QA logs issues that later turn out to be non-bugs — duplicates, misinterpretations, missing requirement context, or environment issues.

“A high invalid rate usually means the QA team doesn’t have enough clarity, or enough knowledge of how the system works: either documentation is missing or communication is weak.”

When the invalid rate reaches 15–20%, it’s a serious red flag. It shows:

On one project the expert described, this metric dropped steadily over several months as the team clarified documentation and improved cross-functional communication. The difference was dramatic: fewer misunderstandings, fewer confused tickets, smoother workflows.
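As an illustration, the invalid rate can be derived from ticket resolutions and checked against the 15% lower bound of the red-flag range mentioned above. The resolution labels are hypothetical and would need to match whatever a team's tracker actually uses.

```python
# Assumed resolution labels that count as "not a real bug".
INVALID_RESOLUTIONS = {"duplicate", "works_as_designed", "missing_context", "environment"}
RED_FLAG_THRESHOLD = 15.0  # lower bound of the 15-20% range cited in the text


def invalid_defect_rate(resolutions: list[str]) -> float:
    """Percentage of logged defects that were closed as non-bugs."""
    if not resolutions:
        return 0.0
    invalid = sum(1 for r in resolutions if r in INVALID_RESOLUTIONS)
    return 100.0 * invalid / len(resolutions)


# Hypothetical month: 16 genuine fixes and 4 tickets closed as invalid.
resolutions = ["fixed"] * 16 + ["duplicate", "duplicate", "environment", "works_as_designed"]
rate = invalid_defect_rate(resolutions)
print(rate, rate >= RED_FLAG_THRESHOLD)  # 20.0 True
```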

Reopened defects appear when bugs marked “fixed” reappear or fail retesting.

“A high reopen rate often means the fix didn’t address the root cause or lacked proper regression around it.”

The common causes include:

A high reopen rate erodes confidence inside the team and signals that deeper process adjustments are needed.
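A reopen rate can be sketched as the share of fixed defects that were reopened at least once. The input format, one reopen count per fixed defect, is an assumption made for the example.

```python
def reopen_rate(reopen_counts: list[int]) -> float:
    """Percentage of fixed defects that were reopened at least once."""
    if not reopen_counts:
        return 0.0
    reopened = sum(1 for count in reopen_counts if count > 0)
    return 100.0 * reopened / len(reopen_counts)


# Hypothetical: ten fixed defects, two of which bounced back (one of them twice).
print(reopen_rate([0, 0, 1, 0, 2, 0, 0, 0, 0, 0]))  # 20.0
```

Counting a defect once no matter how many times it bounced keeps the rate comparable across sprints; the raw counts remain available for spotting individual fixes that repeatedly fail.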

Coverage metrics, a core category within today’s QA metrics landscape, aren’t about perfection; they’re about awareness.

“Coverage shows what we’ve touched and what we haven’t.”

Coverage metrics include:

Unit tests form the first line of defense. They catch defects early, before the product even reaches QA.

Automation coverage then acts as a safety net during regression. High-quality automation lets QA spend less time on repetitive checks and far more on exploratory and high-risk testing.

A balanced coverage strategy recognizes that automation isn’t about covering everything — it’s about covering the areas where failure would be catastrophic, high-frequency flows, and scenarios that are too expensive for manual execution.
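To make the idea of risk-weighted coverage concrete, the sketch below reports automation coverage per risk tier instead of as a single overall figure. The tier names and the `(risk_tier, is_automated)` input shape are hypothetical.

```python
def automation_coverage(cases: list[tuple[str, bool]]) -> dict[str, float]:
    """Percentage of test cases automated, per risk tier.

    Each case is (risk_tier, is_automated).
    """
    totals: dict[str, int] = {}
    automated: dict[str, int] = {}
    for tier, is_automated in cases:
        totals[tier] = totals.get(tier, 0) + 1
        automated[tier] = automated.get(tier, 0) + (1 if is_automated else 0)
    return {tier: 100.0 * automated[tier] / totals[tier] for tier in totals}


# Hypothetical suite: four critical-path cases and two low-risk cases.
cases = [
    ("critical", True), ("critical", True), ("critical", False), ("critical", True),
    ("low", True), ("low", False),
]
print(automation_coverage(cases))  # {'critical': 75.0, 'low': 50.0}
```

Splitting by tier makes it visible when a high overall percentage hides an uncovered critical flow.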

One of the points the expert emphasized most was the importance of examining metrics as trends rather than isolated snapshots. Trends tell the story, but short-term readings should not be ignored: they can provide the early warning needed to intervene before issues escalate.

“A single month cannot show much, but one to three months will show a real story.”

Viewed over several months, these quality metrics in software testing tell teams:

The difference between a chaotic process and a predictable one is rarely visible in one sprint. It’s visible over time.
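One simple way to read a metric as a trend is a rolling average, which smooths single-month noise. The three-month window below is an assumption loosely based on the expert's "one to three months" remark, and the monthly leakage values are invented.

```python
def rolling_mean(values: list[float], window: int = 3) -> list[float]:
    """Mean of each consecutive `window`-sized slice of the series."""
    if window < 1:
        raise ValueError("window must be at least 1")
    return [
        sum(values[i - window + 1 : i + 1]) / window
        for i in range(window - 1, len(values))
    ]


# Hypothetical monthly Defect Leakage percentages over half a year.
monthly_leakage = [8.0, 6.0, 7.0, 5.0, 3.0, 4.0]
print(rolling_mean(monthly_leakage))  # [7.0, 6.0, 5.0, 4.0]
```

The raw series bounces up in months three and six, while the smoothed one falls steadily; keeping both in view preserves the early-warning value of the monthly numbers.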

Metrics aren’t just internal tools — they have a huge impact on how clients perceive the team’s competence.

Every time a defect escapes to production, every time a hotfix disrupts the sprint, and every time the client uncovers an issue before the team does, trust erodes.

“Clients judge stability by their experience. If they repeatedly see bugs, they stop believing in the team’s process.”

Strong metrics — and especially strong trends — reinforce the opposite:

Clients don’t need to see the numbers to feel the results. Stability communicates itself. This is why teams that consistently monitor their quality metrics tend to earn deeper client trust — the stability becomes evident long before the client sees any dashboard.

The expert shared several examples from large projects where metrics informed strategic decisions.

After implementing better regression routines and cleaning automation suites, Defect Containment increased noticeably. Production issues fell, and the client immediately gained confidence in the release cadence.

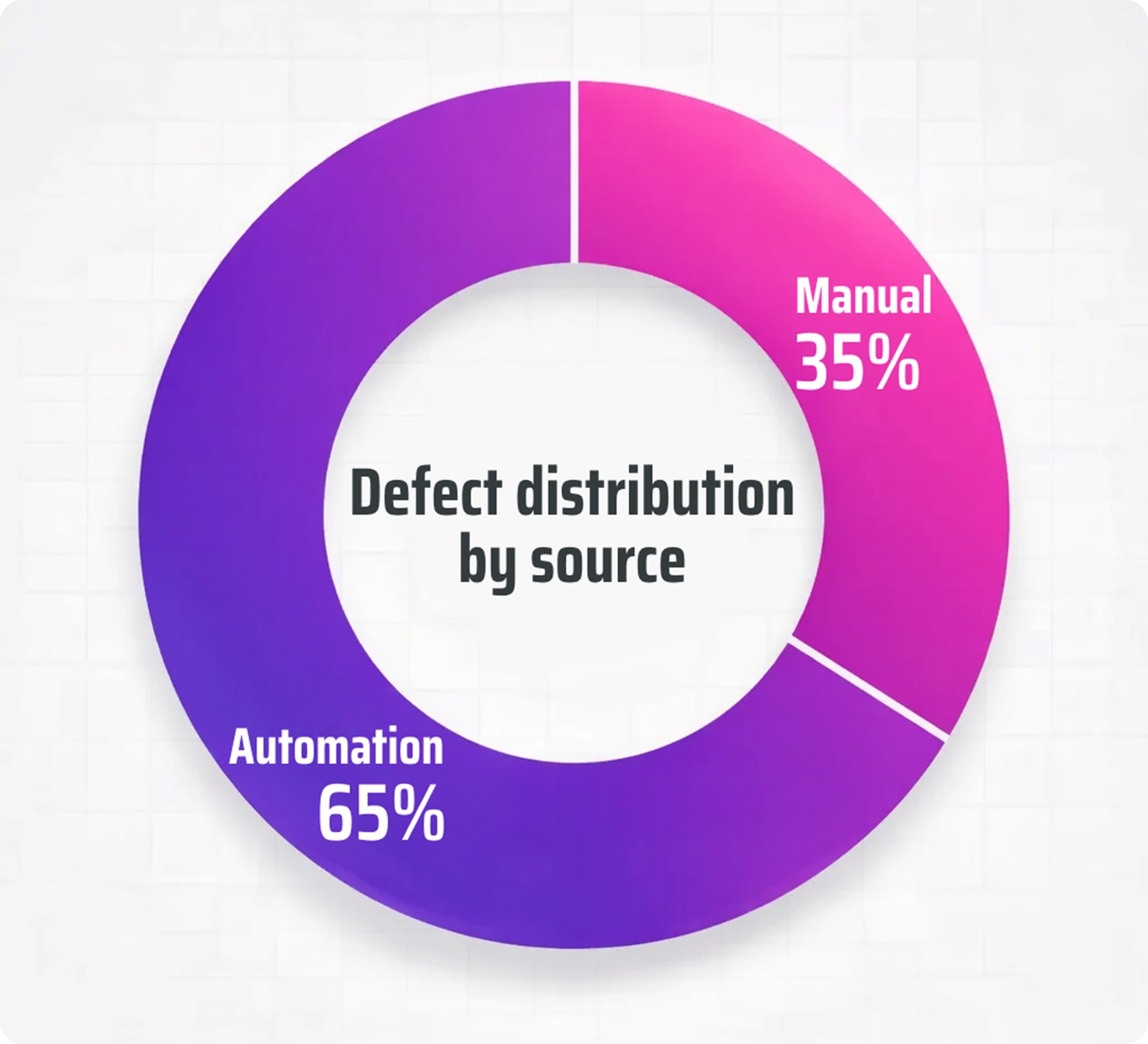

In one long-running system, automated tests originally caught about 40% of total bugs. After half a year of refinement, automation began catching 65% of defects, dramatically reducing manual workload and speeding up verification cycles.

As documentation and communication improved, false positives decreased. QA began filing fewer incorrect tickets, saving time across the entire team and creating smoother developer–tester collaboration.

These cases show that metrics aren’t cosmetic. They’re diagnostic — and when acted upon, transformative.

Great QA cultures aren’t built on heroics. They’re built on clarity.

Metrics give teams that clarity. They illuminate what’s working, expose what isn’t, and help everyone make decisions based on data rather than assumptions.

“Metrics don’t fix anything on their own. But they tell you exactly where to focus your energy.”

Teams that use metrics well:

If your team needs help designing a practical set of QA metrics and integrating them into your delivery workflow, you can always turn to Attico’s quality assurance services to build a metrics-driven testing approach that fits your product and release cadence.

There is one more dimension of QA metrics that often goes unnoticed: their effect on the team itself. Acting on what the metrics show improves day-to-day work. Testers stop drowning in repeated checks. Developers notice fewer interruptions and context switches. Product owners see features stabilizing earlier. All of this gradually builds a sense of control instead of chaos.

The expert notes that “teams become calmer and more confident when they see progress reflected in data.” Small improvements stop feeling abstract — they show up in charts, dashboards, and sprint reviews. That feedback loop reinforces good habits, motivates teams to refine their testing strategy, and reduces the emotional fatigue that comes from unpredictable releases. In other words, metrics don’t just measure quality — they help people feel that quality is achievable.

Quality is never static. It shifts constantly with new features, new dependencies, new integrations, and new expectations. To keep up, teams need more than intuition — they need visibility. That visibility comes from software quality metrics that help teams observe patterns, catch regressions early, and avoid quality decay over time.

Metrics such as Defect Containment, Leakage, Defect Density, Invalid Defects, Reopened Defects, Coverage, Velocity, and Scope Creep together paint a complete picture of product health and process stability. Observed over time, they become a clear map of where quality is improving and where risk is growing.

“Metrics show you the truth. And once you see the truth, it becomes possible to improve without guessing.”

In a world where clients expect seamless digital experiences, teams that measure well inevitably build better software — and stronger trust.